Google DeepMind has released TxGemma, a set of open-weight AI models designed for therapeutic development. These models, based on the Gemma architecture, are trained to analyze and predict characteristics of therapeutic entities during drug discovery. 💊

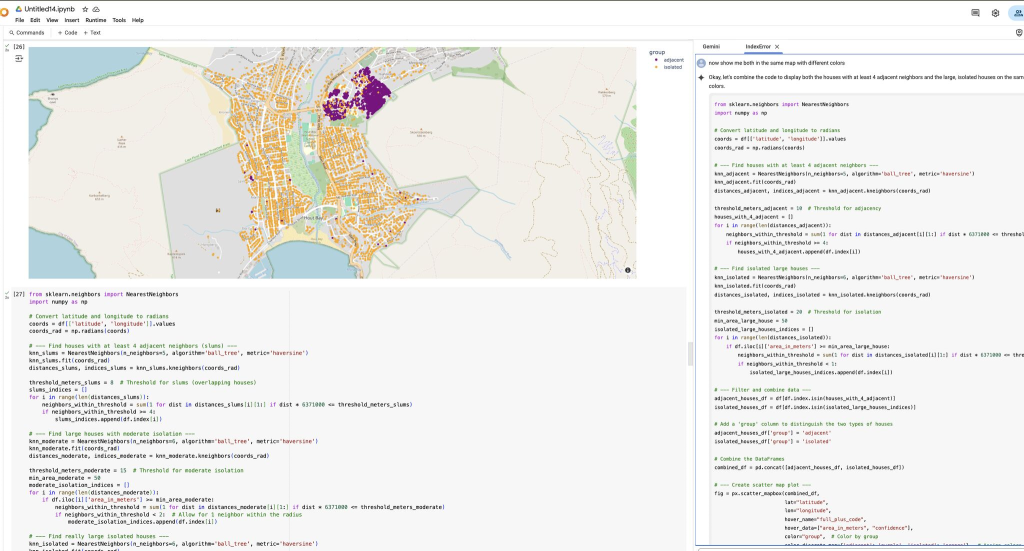

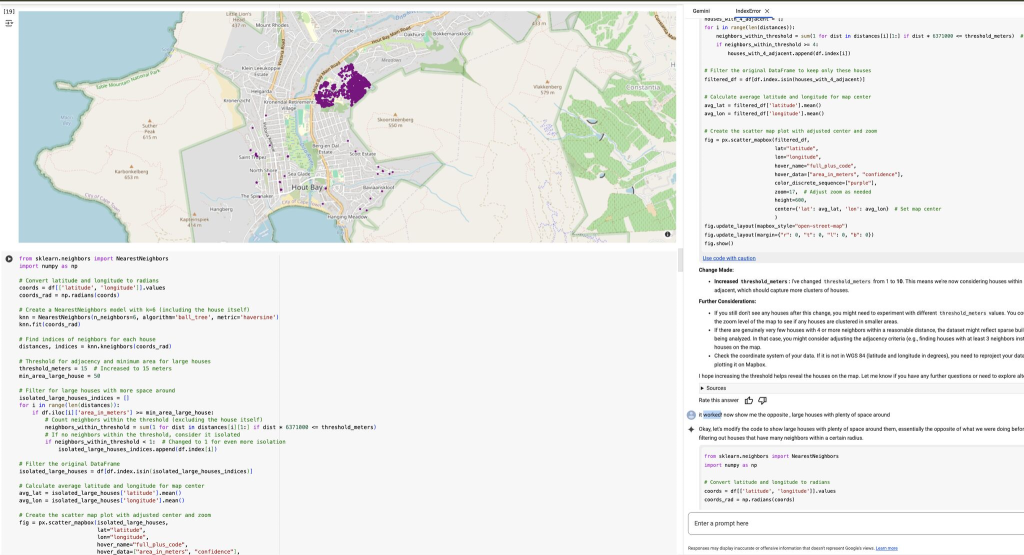

Alongside the prediction models (2B, 9B and 27B), the release includes ‘chat’ variants (9B and 27B) that can hold a dialogue and explain the reasoning behind their predictions. Agentic-Tx, in turn, shows how TxGemma can be integrated into an agentic system that tackles multi-step research questions. 🤖
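To make the dialogue behaviour of the chat variants more concrete, here is a minimal Python sketch of how a multi-turn conversation is flattened into a single prompt string. It assumes TxGemma-Chat inherits the Gemma 2 chat format (`<start_of_turn>`/`<end_of_turn>` markers, `model` as the assistant role, no system role). `format_gemma_chat` is a hypothetical helper for illustration only. In real code, `tokenizer.apply_chat_template` from Hugging Face `transformers` is the better choice because it uses the exact template shipped with the checkpoint.

```python
from typing import Dict, List

# Assumption: TxGemma-Chat inherits Gemma 2's turn markers. In real code,
# tokenizer.apply_chat_template reads the exact template shipped with the model.
BOS = "<bos>"
START_TURN = "<start_of_turn>"
END_TURN = "<end_of_turn>"


def format_gemma_chat(messages: List[Dict[str, str]],
                      add_generation_prompt: bool = True) -> str:
    """Render alternating user/assistant messages as one Gemma-style prompt (hypothetical helper)."""
    parts = [BOS]
    for i, message in enumerate(messages):
        # Gemma 2 templates reject a system role and require strict
        # user/assistant alternation, starting with the user.
        expected = "user" if i % 2 == 0 else "assistant"
        if message["role"] != expected:
            raise ValueError(
                f"message {i}: expected role {expected!r}, got {message['role']!r}"
            )
        # Gemma names the assistant turn "model".
        role = "model" if expected == "assistant" else "user"
        parts.append(f"{START_TURN}{role}\n{message['content'].strip()}{END_TURN}\n")
    if add_generation_prompt:
        # Leave a model turn open so generation continues as the assistant.
        parts.append(f"{START_TURN}model\n")
    return "".join(parts)


if __name__ == "__main__":
    conversation = [
        {"role": "user", "content": "Is aspirin likely to cross the blood-brain barrier?"},
        {"role": "assistant", "content": "(previous model answer)"},
        {"role": "user", "content": "Explain the reasoning behind that prediction."},
    ]
    print(format_gemma_chat(conversation))
```

Keeping the earlier turns in the prompt is what lets a follow-up such as "explain the reasoning" refer back to the previous prediction.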

A fine-tuning notebook is available for custom task adaptation:

It runs on a free T4 GPU once you have accepted the model license on Hugging Face and provided a Hugging Face access token:

If you run into issues with the official fine-tuning notebook, you can use my pre-configured Colab notebook instead:

Further resources:

- Inference (TxGemma-Chat) notebook: https://github.com/google-gemini/gemma-cookbook/blob/main/TxGemma/%5BTxGemma%5DQuickstart_with_Hugging_Face.ipynb

- Agent (Agentic-Tx) notebook: https://github.com/google-gemini/gemma-cookbook/blob/main/TxGemma/%5BTxGemma%5DAgentic_Demo_with_Hugging_Face.ipynb

- Detailed information: https://developers.googleblog.com/en/introducing-txgemma-open-models-improving-therapeutics-development/
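Unlike the chat variants, the prediction models are queried with task-specific prompt templates rather than free-form conversation. The Python sketch below shows only the template-filling step, under stated assumptions: the template wording and the `{Drug SMILES}` placeholder are illustrative stand-ins (the official notebooks' actual templates may differ), and `build_prediction_prompt` is a hypothetical helper.

```python
# Hypothetical TDC-style template for a binary property question.
# The placeholder name and wording are assumptions, not the official templates.
PLACEHOLDER = "{Drug SMILES}"
EXAMPLE_TEMPLATE = (
    "Instructions: Answer the following question about drug properties.\n"
    "Context: The blood-brain barrier (BBB) limits which molecules can enter the brain.\n"
    "Question: Given a drug SMILES string, predict whether it\n"
    "(A) does not cross the BBB (B) crosses the BBB\n"
    "Drug SMILES: {Drug SMILES}\n"
    "Answer:"
)


def build_prediction_prompt(template: str, smiles: str,
                            placeholder: str = PLACEHOLDER) -> str:
    """Fill a single-input task template with a SMILES string (hypothetical helper)."""
    if placeholder not in template:
        raise ValueError(f"template has no {placeholder!r} placeholder")
    smiles = smiles.strip()
    if not smiles:
        raise ValueError("empty SMILES string")
    # Plain substitution rather than str.format, so any other braces
    # in the template are left untouched.
    return template.replace(placeholder, smiles)


if __name__ == "__main__":
    aspirin = "CC(=O)OC1=CC=CC=C1C(=O)O"
    print(build_prediction_prompt(EXAMPLE_TEMPLATE, aspirin))
```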

Credit for this release goes to Shekoofeh Azizi and the other contributors. 🎉